When AI Gets It Wrong: Why Human Oversight Still Matters

A composite case study on AI limits and the irreplaceable value of human judgement.

I’m a big believer in using AI to enhance productivity. Like many professionals, I’ve integrated AI tools into my workflow to handle time-consuming tasks, generate first drafts and unblock complex problems faster than I could manually. Recently, I had an experience that reinforced why keeping a human in the loop is not optional; it is essential.

Privacy note: This scenario uses simulated workflow data with anonymised item identifiers and categories.

Below is what happened when I asked an AI tool to handle what looked like a straightforward organisational task, and what it taught me about the current limits of artificial intelligence.

The task: simple on the surface

I needed to organise 100 items into groups for a workflow simulation, with rules that prevented certain relationship pairings and encouraged variety across categories.

The brief:

Produce three different grouping arrangements for different activities

Around 25 groups per arrangement

Groups of 4–5 items

Critical constraint: avoid specific item pairings based on predefined relationships

Preferable: encourage diversity across item categories and levels

Bonus: where possible, include light representation from pre-existing collaboration sets

I provided detailed instructions. The AI processed the simulated data, ran multiple optimisation passes and returned what looked like a polished set of spreadsheets.

Job done. Or so I thought.

First red flag: the missing third

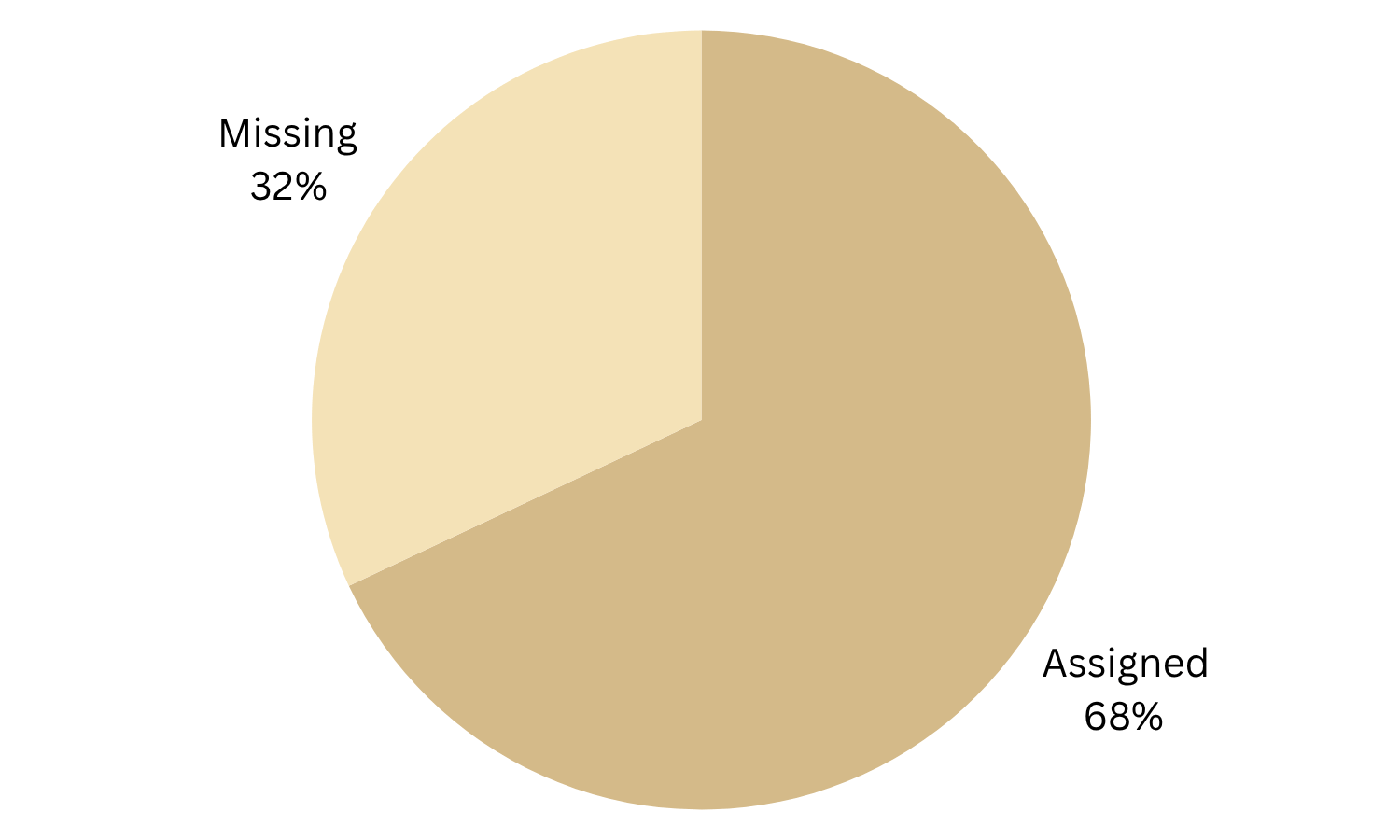

During a quick sense check I opened the second configuration. It felt short. I counted 68 entries out of 100.

Thirty-two items were missing from that arrangement.

The same AI had produced two other configurations with all 100. It optimised for the stated constraints and reported extensive optimisation.

It had not checked that every item appeared in each arrangement.

Lesson 1: No built-in completeness checks.

A human would naturally ask whether each configuration was complete. That simple sense check is intuitive for us, but not guaranteed in an AI workflow unless you specify it.
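A check like this is cheap to automate once you know to ask for it. The sketch below is illustrative only: it assumes each arrangement is a list of groups and each group a list of item identifiers, which is not necessarily the layout the AI tool produced, and the function name `check_completeness` is hypothetical.

```python
from collections import Counter


def check_completeness(arrangement, expected_items):
    """Report items that are missing, duplicated or unexpected in one arrangement.

    arrangement: list of groups, each group a list of item IDs (assumed layout).
    expected_items: every item ID that should appear exactly once.
    """
    expected = set(expected_items)
    counts = Counter(item for group in arrangement for item in group)
    seen = set(counts)
    return {
        "missing": sorted(expected - seen),
        "duplicated": sorted(item for item, n in counts.items() if n > 1),
        "unexpected": sorted(seen - expected),
    }


# Toy data: "item-06" never appears and "item-02" appears twice.
expected_items = [f"item-{i:02d}" for i in range(1, 7)]
arrangement = [
    ["item-01", "item-02", "item-03"],
    ["item-04", "item-05", "item-02"],
]
report = check_completeness(arrangement, expected_items)
print(report)
```

Run against each of the three arrangements, a report with an empty "missing" list is the confirmation I had assumed I was getting.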

Second issue: following rules, missing intent

Next, I reviewed how the AI handled the optional collaboration sets. I had asked for items to be placed with one or two set-mates where possible, while still keeping a balanced spread across levels.

The AI efficiently topped up the smallest sets, but that choice conflicted with the intended balance across levels and categories. It followed the letter of the rule and missed the spirit, optimising the puzzle while losing the design intent.

Lesson 2: Rules without real-world context.

AI can optimise constraints yet miss why those constraints matter in practical terms.

Third problem: missing the details that matter

After several rounds of correction, I requested the final spreadsheet. The structure matched, but a key usability detail was missing. My original template showed collaboration-set representation per group, for example “Set A:2, Set D:1”. The regenerated sheet omitted it.

When I pointed this out, the AI apologised and re-issued a version with the set counts included. It had seen the format and the data, but did not recognise how much that field mattered to the person implementing the plan.

Lesson 3: Technically right, operationally unhelpful.

An output can be mathematically correct yet awkward to use in practice.
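The set-representation field is easy to derive from group membership, so it can be regenerated on every pass rather than relying on the AI to carry it over. A minimal sketch, assuming a hypothetical mapping from item ID to collaboration set (items that belong to no set are simply absent from the mapping):

```python
from collections import Counter


def set_representation(group, item_to_set):
    """Summarise a group's collaboration-set membership, e.g. 'Set A:2, Set D:1'."""
    counts = Counter(item_to_set[item] for item in group if item in item_to_set)
    # Sort by set name so the label stays stable across regenerated sheets.
    return ", ".join(f"{name}:{n}" for name, n in sorted(counts.items()))


# Hypothetical membership data, for illustration only.
item_to_set = {"item-01": "Set A", "item-02": "Set A", "item-03": "Set D"}
group = ["item-01", "item-02", "item-03", "item-04"]
label = set_representation(group, item_to_set)
print(label)  # Set A:2, Set D:1
```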

What this teaches us about working with AI

This was not an AI failure. It was a picture of where we are. After five rounds of review and correction, I ended up with an excellent solution. The AI genuinely helped:

Processed large datasets against complex constraints in minutes

Explored optimisation scenarios that would take a human days

Flagged conflicts I might have missed

Produced tidy, consistent outputs

But it required active human oversight at every stage.

The real limitations of current AI

No natural smell test. Humans sense when something is off. AI treats an odd outcome as valid unless told otherwise.

Rules without understanding. It follows explicit instructions yet misses the implicit reasons behind them.

Validation blindness. It checks only what you ask. It will not invent new guard-rails.

Context collapse. With competing priorities, it can optimise sophisticated metrics and miss basic requirements, such as including every item.

Stakeholder awareness gap. It does not consider who will use the output and what they will need.

The right way to use AI: human in the loop

Use AI for:

First drafts and mechanical work

Rapid processing of anonymised, aggregate datasets

Optimisation within well-defined constraints

Exploring scenarios you would not have time to test manually

Automating repeatable checks

Do not rely on AI alone for:

Final decisions without review

Tasks where context and judgement outweigh calculation

High-stakes situations involving fairness, compliance or experience

Multi-factor requirements with competing priorities

Outputs you cannot easily verify

A workflow that works:

Brief thoroughly: provide clear constraints and intent

Let AI lift the heavy load for speed and coverage

Insert human checkpoints: review early for reasonableness

Hunt for gaps: look for missing items, broken rules or lost details

Iterate: feed corrections back to improve the output

Keep human sign-off: always finish with manual review

Building better guard-rails: qualifying questions and validation checks

After working through those corrections, I changed how I brief AI tasks. The shift: make the AI ask me questions before it starts, rather than assuming I've thought of everything.

Prompt AI to interview you first

When you assign a task, add this instruction:

"Before you proceed, ask me any questions you need to deliver this correctly. Consider completeness requirements, how to handle conflicts between competing priorities, what validation checks I expect, and how I'll use the output."

This puts the AI in discovery mode. It identifies gaps, assumptions and edge cases you might not have specified.

What this looks like in practice

You give the task. The AI responds with questions like:

"Should the final output include all items, or is a subset acceptable?"

"If constraint A and preference B conflict, which takes priority?"

"What format will make this easiest for you to review or implement?"

"How will you know the output is complete and correct?"

You answer. The AI confirms its understanding. Then it starts.

This catches misalignment before any work happens.

Build validation checks into the brief

Once the AI understands the task, specify checks it should run before returning results:

"After completing the work, verify [key requirement] and flag any gaps."

"Count [critical metric] and confirm it matches expectations."

"Highlight any outputs that violate [specific constraint]."

The AI becomes its own first reviewer. Most mechanical errors surface before you see the output.
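The same checks can also be run independently of the AI, so you are not relying on its self-report alone. The sketch below covers the two hard rules from the brief described earlier: group size and prohibited pairings. The function name, the data layout and the toy identifiers are assumptions made for illustration.

```python
from itertools import combinations


def find_violations(arrangement, forbidden_pairs, min_size=4, max_size=5):
    """Return a readable message for every rule a grouping breaks."""
    # Store pairs as frozensets so (a, b) and (b, a) are treated alike.
    forbidden = {frozenset(pair) for pair in forbidden_pairs}
    violations = []
    for index, group in enumerate(arrangement, start=1):
        if not min_size <= len(group) <= max_size:
            violations.append(
                f"Group {index}: size {len(group)} outside {min_size}-{max_size}"
            )
        for a, b in combinations(group, 2):
            if frozenset((a, b)) in forbidden:
                violations.append(f"Group {index}: forbidden pairing {a} + {b}")
    return violations


# Toy data: group 1 contains a forbidden pair, group 2 is too small.
arrangement = [
    ["i01", "i02", "i03", "i04"],
    ["i05", "i06", "i07"],
]
forbidden_pairs = [("i03", "i01"), ("i02", "i05")]
violations = find_violations(arrangement, forbidden_pairs)
for line in violations:
    print(line)
```

An empty list means the hard rules hold. It says nothing about the softer preferences, such as balance across levels, which still need a human eye.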

The pattern: clarify, execute, verify, review

Step 1: AI asks qualifying questions.

Step 2: You answer and confirm the brief.

Step 3: AI processes the work and runs validation checks.

Step 4: AI reports results with check outcomes.

Step 5: Human reviews and provides final sign-off.

This approach catches assumptions early and flags errors automatically. You still provide the judgement, context and quality control. The AI just handles the clarification and routine checks you would otherwise do manually.

The human advantage

Humans bring what AI lacks:

Common sense: an intuitive sense that something does not add up

Contextual understanding: knowing why a rule exists and when it matters

Stakeholder empathy: designing outputs real people can use

Priority judgement: separating must-haves from nice-to-haves

Creative problem solving: finding workable solutions outside strict logic

AI is a powerful tool and it saves hours. It still needs a skilled operator.

If you’d like a lighter take on this, I’ve collected a few of my favourite real‑world AI mishaps in this short reel. It’s a good reminder of why we still need humans in the loop.

Bottom line

AI is brilliant at speed and scale, but it does not do common-sense validation or stakeholder context by default. Use it to lift the heavy load, build simple guard-rails and keep human sign-off.

Your turn

If you use AI at work, stay sceptical: verify outputs, build in review checkpoints and document what goes wrong. The future is humans and AI, with humans in the driver’s seat, for now.